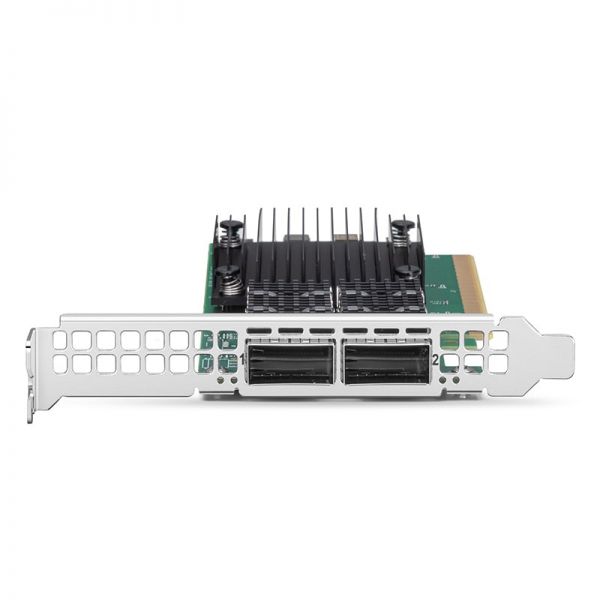

Mellanox ConnectX-6 Dx MCX623436AN-CDAB Dual-Port 100GbE Adapter

Device Type: Network adapter / Ethernet SmartNIC

Ports: Dual-Port QSFP56

Supported Ethernet Speeds: 100/50/40/25/10/1GbE

Host Interface: PCIe 4.0 x16

Controller: Mellanox Technologies ConnectX-6 Dx

PCIe Link Rate: 16.0 GT/s per lane (PCIe 4.0)

Cabling Type: QSFP56 optical modules or QSFP56 direct-attach copper cables

RDMA: Yes (RoCE / RoCE v2)

OS Support: Linux, Windows Server, VMware ESXi (standard ConnectX-6 Dx driver family)

Warranty: 3 months

Overview

The Mellanox ConnectX-6 Dx MCX623436AN-CDAB is a dual-port 100GbE Ethernet SmartNIC designed for high-performance computing, cloud infrastructure, and large-scale data center environments. It combines two QSFP56 ports, a PCIe 4.0 x16 host interface, RDMA over Converged Ethernet (RoCE), and hardware offloads, giving demanding workloads high throughput and low latency on Linux, Windows Server, and VMware ESXi.

Key Features & Detailed Analysis

1. Dual-Port QSFP56 100GbE Connectivity

- Two high-speed QSFP56 ports supporting 100/50/40/25/10/1GbE

- Compatible with QSFP56 optical modules and QSFP56 DAC cables

- Ports can run at lower speeds to match existing switches and cabling in mixed-speed environments
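As a sketch of how one port might be pinned to a lower speed on Linux, the Python helper below builds (but does not run) an `ethtool -s` command line. The helper name `ethtool_force_speed` and the interface name `enp1s0f0` are hypothetical. Whether a forced speed actually links up also depends on the installed module or cable and on the switch port, so treat this as an illustration rather than a validated procedure.

```python
# Hypothetical helper: build an `ethtool -s` argv that forces a fixed speed.
# It only constructs the command; running it would normally require root.
SUPPORTED_GBE = (100, 50, 40, 25, 10, 1)  # speeds listed for this adapter


def ethtool_force_speed(iface: str, gbe: int) -> list:
    """Return an argv list that pins `iface` to `gbe` GbE with autoneg off."""
    if gbe not in SUPPORTED_GBE:
        raise ValueError(f"{gbe}GbE is not among the listed speeds {SUPPORTED_GBE}")
    # ethtool expects the speed in Mb/s; disabling autoneg keeps the speed fixed.
    return ["ethtool", "-s", iface, "speed", str(gbe * 1000), "autoneg", "off"]


if __name__ == "__main__":
    # Example: pin a (hypothetically named) port to 25GbE.
    print(" ".join(ethtool_force_speed("enp1s0f0", 25)))
```

Returning an argv list rather than a single string makes the result safe to pass to `subprocess.run` without shell quoting.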

2. PCIe 4.0 x16 Host Interface

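- 16.0 GT/s per lane; across 16 lanes that is about 252 Gb/s per direction after 128b/130b encoding

- Enough host bandwidth for both 100GbE ports (200 Gb/s combined), which a PCIe 3.0 x16 slot (about 126 Gb/s) cannot sustain

- Ideal for modern servers with PCIe Gen4 slots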
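The host-bandwidth figures come from simple arithmetic. The Python sketch below works per direction: it multiplies the per-lane transfer rate by 16 lanes and applies the 128b/130b line encoding used by PCIe 3.0 and 4.0. It deliberately ignores packet (TLP) headers and flow-control traffic, so real usable throughput is somewhat lower than what it prints.

```python
# Back-of-envelope PCIe vs. Ethernet bandwidth check, per direction.
# Only 128b/130b line encoding is modeled; TLP headers and flow control are ignored.


def pcie_gbps(gt_per_s: float, lanes: int) -> float:
    """Bit rate left after 128b/130b encoding, in Gb/s per direction."""
    return gt_per_s * lanes * 128 / 130


ETH_DEMAND_GBPS = 2 * 100  # both 100GbE ports running at line rate

for name, gts in (("PCIe 3.0 x16", 8.0), ("PCIe 4.0 x16", 16.0)):
    bw = pcie_gbps(gts, 16)
    verdict = "enough" if bw >= ETH_DEMAND_GBPS else "not enough"
    print(f"{name}: {bw:.1f} Gb/s -> {verdict} for {ETH_DEMAND_GBPS} Gb/s")
```

This prints about 126.0 Gb/s for Gen3 x16 and 252.1 Gb/s for Gen4 x16: a Gen4 x16 link leaves headroom for two 100GbE ports, while a Gen3 x16 link falls short.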

3. ConnectX-6 Dx SmartNIC Controller

- Built on Mellanox’s advanced ConnectX-6 Dx architecture

- Offloads packet processing to hardware, lowering host CPU load and latency

- Supports stateful offloads and secure, high-efficiency packet processing

4. RDMA & Low-Latency Features

- Full support for RDMA (RoCE / RoCE v2)

- Enables ultra-low latency communication for AI/ML clusters, HPC, and storage fabrics

- Optimized for latency-critical workloads
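The two RoCE variants differ in how RDMA traffic is framed. RoCE v1 is carried directly in Ethernet frames (EtherType 0x8915) and stays within one layer-2 domain. RoCE v2 is carried in UDP over IPv4 or IPv6 with destination port 4791, which lets it be routed across subnets. The Python classifier below is an illustrative sketch working from already-parsed header fields; the function name `roce_version` is hypothetical, and it is not a full packet parser (for example, it ignores IPv6 extension headers).

```python
from typing import Optional

ROCE_V1_ETHERTYPE = 0x8915  # RoCE v1: RDMA payload directly in an Ethernet frame
IPV4_ETHERTYPE = 0x0800
IPV6_ETHERTYPE = 0x86DD
UDP_PROTO = 17              # IP protocol / IPv6 next-header value for UDP
ROCE_V2_UDP_PORT = 4791     # RoCE v2: UDP destination port


def roce_version(ethertype: int,
                 ip_proto: Optional[int] = None,
                 udp_dst_port: Optional[int] = None) -> Optional[str]:
    """Classify a frame from parsed header fields; return None if it is not RoCE."""
    if ethertype == ROCE_V1_ETHERTYPE:
        return "RoCE v1"  # layer-2 only, so it cannot cross an IP router
    if (ethertype in (IPV4_ETHERTYPE, IPV6_ETHERTYPE)
            and ip_proto == UDP_PROTO
            and udp_dst_port == ROCE_V2_UDP_PORT):
        return "RoCE v2"  # UDP/IP encapsulation makes it routable
    return None


print(roce_version(IPV4_ETHERTYPE, UDP_PROTO, ROCE_V2_UDP_PORT))  # RoCE v2
print(roce_version(ROCE_V1_ETHERTYPE))                            # RoCE v1
```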

5. Broad OS Compatibility

- Supports Linux distributions, Windows Server, and VMware ESXi

- Uses the standard Mellanox ConnectX-6 Dx driver family (mlx5_core on Linux)

- Ensures seamless integration into enterprise and data center ecosystems
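On Linux, ConnectX-6 Dx ports are normally handled by the in-kernel `mlx5_core` driver. A minimal sketch for confirming which driver owns an interface reads the sysfs `driver` symlink under the interface's device directory. The function name `net_driver` and the interface name `enp1s0f0` are hypothetical; the sysfs layout is standard on Linux.

```python
import os
from typing import Optional


def net_driver(iface: str, sysfs: str = "/sys/class/net") -> Optional[str]:
    """Return the kernel driver bound to a network interface, or None."""
    # <sysfs>/<iface>/device/driver is a symlink whose last component is the driver name.
    link = os.path.join(sysfs, iface, "device", "driver")
    try:
        return os.path.basename(os.readlink(link))
    except OSError:
        # Missing interface, virtual interface without a device, or not Linux.
        return None


if __name__ == "__main__":
    print(net_driver("enp1s0f0"))  # hypothetical interface name
```

The same information is available from `ethtool -i <iface>`, whose `driver:` line names the bound driver.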

6. Cabling Flexibility

- Works with QSFP56 optical modules or QSFP56 direct-attach copper cables

- DAC cables suit short in-rack runs, while optical modules cover longer distances

Target Use Cases

1. High-Performance Computing (HPC)

- Low-latency RDMA support for cluster interconnects and scientific workloads

2. AI/ML & GPU Clusters

- Fast data throughput for distributed training and inference pipelines

3. Cloud & Virtualized Data Centers

- SmartNIC acceleration for large-scale virtualized environments

4. High-Speed Storage Networks

- Excellent for NVMe-oF, distributed storage, and iSCSI SANs

5. Enterprise Networking & Large Data Transfers

- Designed for demanding applications requiring sustained 100GbE performance

Summary

Strengths

- Dual QSFP56 ports with support for 100/50/40/25/10/1GbE

- PCIe 4.0 x16 provides enough host bandwidth for both ports at 100GbE

- Advanced ConnectX-6 Dx SmartNIC architecture

- RDMA for ultra-low latency communications

- Broad OS support for Linux, Windows Server, and VMware ESXi

- Flexible cabling with QSFP56 optics or DAC

Considerations

- Requires QSFP56 modules or DAC cables (not included)

- Needs a PCIe Gen4 x16 slot to run both ports at full speed; in a PCIe 3.0 x16 slot, host bandwidth drops to about 126 Gb/s per direction

- Designed for enterprise, HPC, and cloud workloads, not general office use
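A card seated in a physical x16 slot can still train at a lower generation or width, for example in an x8 electrical slot or on a Gen3 platform. On Linux the negotiated link is exposed through the PCI device's `current_link_speed` and `current_link_width` sysfs attributes (also shown as `LnkSta` by `lspci -vv`). The sketch below reads them for a network interface; the function names `pcie_link` and `is_full_gen4_x16` and the interface name are hypothetical.

```python
import os
from typing import Optional, Tuple


def pcie_link(iface: str, sysfs: str = "/sys/class/net") -> Optional[Tuple[float, int]]:
    """Return (GT/s, lane count) negotiated by the NIC behind `iface`, or None."""
    dev = os.path.join(sysfs, iface, "device")
    try:
        with open(os.path.join(dev, "current_link_speed")) as f:
            # e.g. "16.0 GT/s PCIe" on recent kernels; keep the leading number.
            speed = float(f.read().split()[0])
        with open(os.path.join(dev, "current_link_width")) as f:
            width = int(f.read().strip())  # e.g. "16"
    except (OSError, ValueError, IndexError):
        # Unreadable attribute, "Unknown" speed, or no such interface.
        return None
    return speed, width


def is_full_gen4_x16(link: Optional[Tuple[float, int]]) -> bool:
    """True if the negotiated link is at least 16 GT/s across 16 lanes."""
    return link is not None and link[0] >= 16.0 and link[1] >= 16


if __name__ == "__main__":
    link = pcie_link("enp1s0f0")  # hypothetical interface name
    print(link, is_full_gen4_x16(link))
```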

Conclusion

The Mellanox ConnectX-6 Dx MCX623436AN-CDAB delivers dual-port 100GbE networking with SmartNIC offloads, RDMA support, and PCIe 4.0 bandwidth. It is a strong choice for data centers, HPC clusters, AI/ML workloads, and high-performance storage environments that require low latency and high throughput.