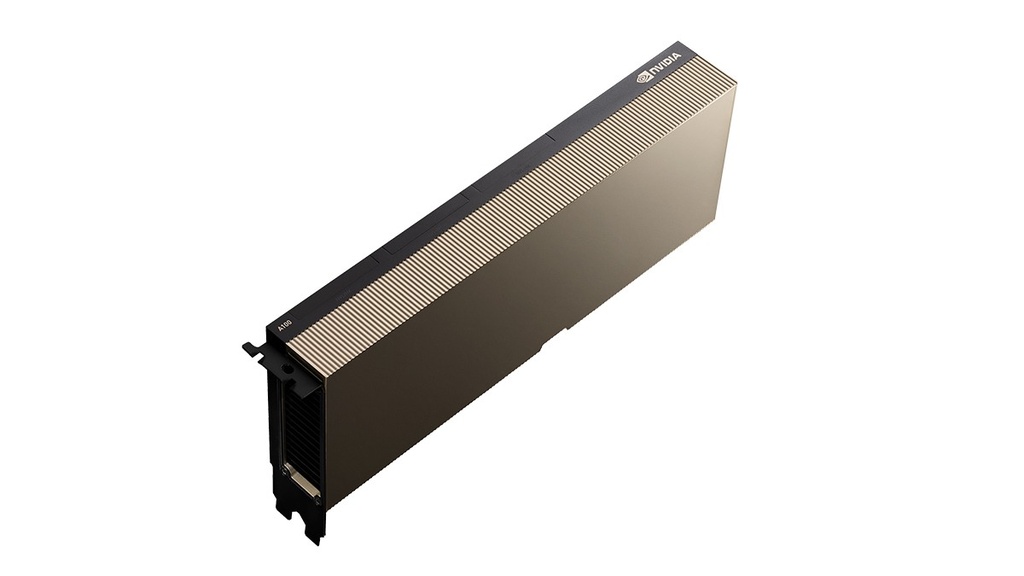

NVIDIA A100 80GB PCIe GPU

Brand: NVIDIA

Model: A100 80GB PCIe

Category: Data-Center / AI Compute GPU

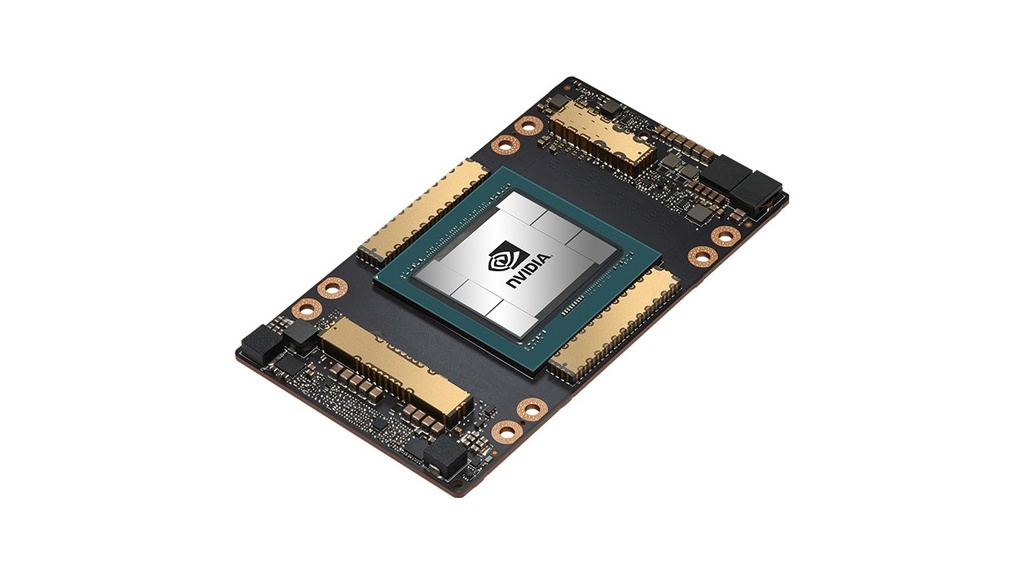

GPU Architecture: NVIDIA Ampere

Memory: 80GB HBM2e

Interface: PCIe Gen4 x16

Performance: Built for AI training, inference, HPC, and data analytics workloads

Form Factor: Full-length PCIe accelerator card

Power Efficiency: High performance-per-watt with Ampere architecture

The NVIDIA A100 80GB PCIe GPU is a data-center-class accelerator designed for demanding AI, machine learning, deep learning, and high-performance computing workloads. With 80GB of HBM2e memory and a PCIe Gen4 x16 interface, it delivers high throughput for large models, complex simulations, and data-intensive applications. Suited to AI training, inference servers, and enterprise compute clusters, the A100 PCIe combines scalability with high performance per watt.

• NVIDIA Ampere architecture for advanced AI and HPC workloads

• Massive 80GB HBM2e memory for large datasets and LLMs

• PCIe Gen4 x16 interface for wide compatibility across servers

• Exceptional AI training, inference, and data analytics performance

• NVIDIA CUDA support, Tensor Cores, and NVLink (via an optional NVLink bridge between paired cards)

• Ideal for AI data centers, cloud infrastructure, and enterprise clusters
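
As a rough illustration of what 80GB of memory means for LLMs, the sketch below estimates whether a model's weights alone would fit on one card. It is a back-of-the-envelope calculation, not a sizing tool: the function names, the 80e9-byte capacity figure, and the 10% reserve for runtime overhead are all assumptions made for this example, and real workloads also need memory for activations, optimizer state, and KV caches.

```python
# Rough sizing sketch (hypothetical helper names, assumed reserve fraction).
# Counts model weights only; activations, optimizer state, and KV cache
# are deliberately ignored.

def weight_bytes(num_params: float, bytes_per_param: int) -> float:
    """Memory needed to hold the weights, in bytes."""
    return num_params * bytes_per_param


def weights_fit(
    num_params: float,
    bytes_per_param: int,
    capacity_bytes: float = 80e9,   # assumption: treat "80GB" as 80e9 bytes
    reserve_fraction: float = 0.1,  # assumption: keep 10% free for overhead
) -> bool:
    """Return True if the weights fit in the usable share of memory."""
    usable = capacity_bytes * (1 - reserve_fraction)
    return weight_bytes(num_params, bytes_per_param) <= usable


if __name__ == "__main__":
    # 2 bytes per parameter corresponds to FP16/BF16, 1 byte to INT8.
    for label, params, width in [
        ("7B @ FP16", 7e9, 2),
        ("70B @ FP16", 70e9, 2),
        ("70B @ INT8", 70e9, 1),
    ]:
        print(label, "fits" if weights_fit(params, width) else "does not fit")
```

Under these assumptions, a 7-billion-parameter model in 16-bit precision fits comfortably on one card, while a 70-billion-parameter model needs 8-bit weights or multiple cards.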